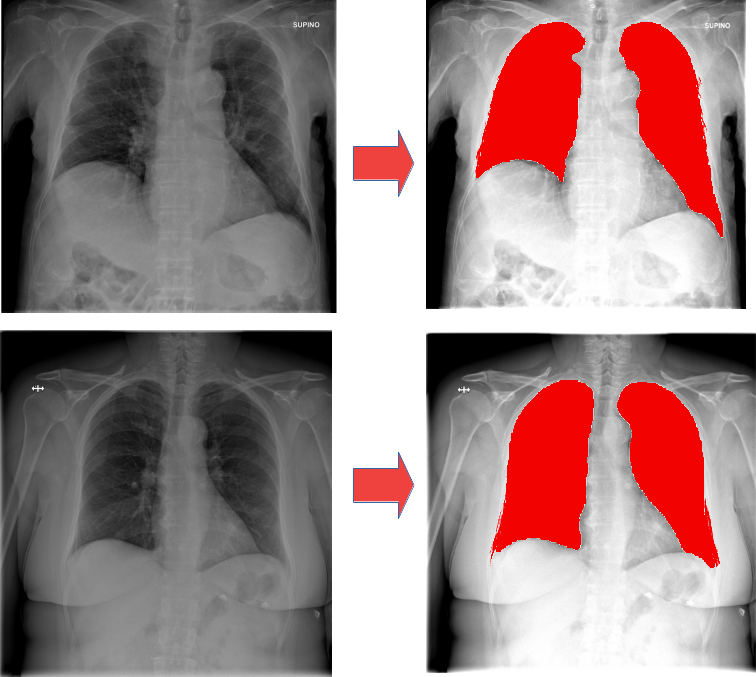

Lung Segmentation on Chest X-Ray images with U-Net

The automated analysis of Chest X-Ray (CXR) images can be an important tool to support physicians.

The necessary first step of CXR analysis is the segmentation of the lungs.

For example, this is useful in the evaluation of CXRs of patients with COVID-19 pneumonia.

In this exercise, we propose to work on this topic:

- The core of the exercise is training a U-Net, an encoder-decoder architecture with skip connections, to perform lung segmentation on CXR images.

- We will use two public CXR datasets, comprising images of 750 patients in total. The datasets include the images along with their manual segmentations, which serve as labels.
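The following sketch makes the encoder-decoder structure with skip connections more concrete. It traces the shape (channels, height, width) of the feature maps through a U-Net-style network without training anything. Several choices here are assumptions and do not come from the exercise: 'same'-padded convolutions (a common simplification of the original U-Net, which used unpadded convolutions and cropped the skip connections), 2x2 pooling, 2x upsampling, four resolution levels, and 64 base channels that double at each level. The function name `unet_shapes` and the 256x256 input size are also illustrative.

```python
def unet_shapes(height, width, in_channels=1, base_channels=64, depth=4, n_classes=1):
    """Return (stage name, (channels, height, width)) pairs for a U-Net-style
    encoder-decoder, assuming 'same'-padded convolutions."""
    if height % 2 ** depth or width % 2 ** depth:
        raise ValueError("height and width must be divisible by 2**depth")
    stages = [("input", (in_channels, height, width))]
    skips = []
    h, w = height, width
    # Encoder: convolutions at each level (channels double per level), keep the
    # result as a skip connection, then 2x2 max pooling halves height and width.
    for level in range(depth):
        c = base_channels * 2 ** level
        stages.append((f"enc{level}", (c, h, w)))
        skips.append(c)
        h, w = h // 2, w // 2
    # Bottleneck at the coarsest resolution.
    c = base_channels * 2 ** depth
    stages.append(("bottleneck", (c, h, w)))
    # Decoder: 2x upsampling that halves the channels, concatenation with the
    # skip connection from the same level, then convolutions back to skip_c channels.
    for level in reversed(range(depth)):
        skip_c = skips[level]
        h, w = h * 2, w * 2
        stages.append((f"concat{level}", (c // 2 + skip_c, h, w)))
        c = skip_c
        stages.append((f"dec{level}", (c, h, w)))
    # Final 1x1 convolution: one score per pixel (lung vs background).
    stages.append(("output", (n_classes, h, w)))
    return stages


if __name__ == "__main__":
    for name, shape in unet_shapes(256, 256):
        print(name, shape)
```

With a 256x256 single-channel input, the bottleneck works at 1024 x 16 x 16. Each decoder concatenation doubles the channel count before the convolutions, because the upsampled features and the skip connection carry the same number of channels. This is how the fine spatial detail from the encoder reaches the segmentation output.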

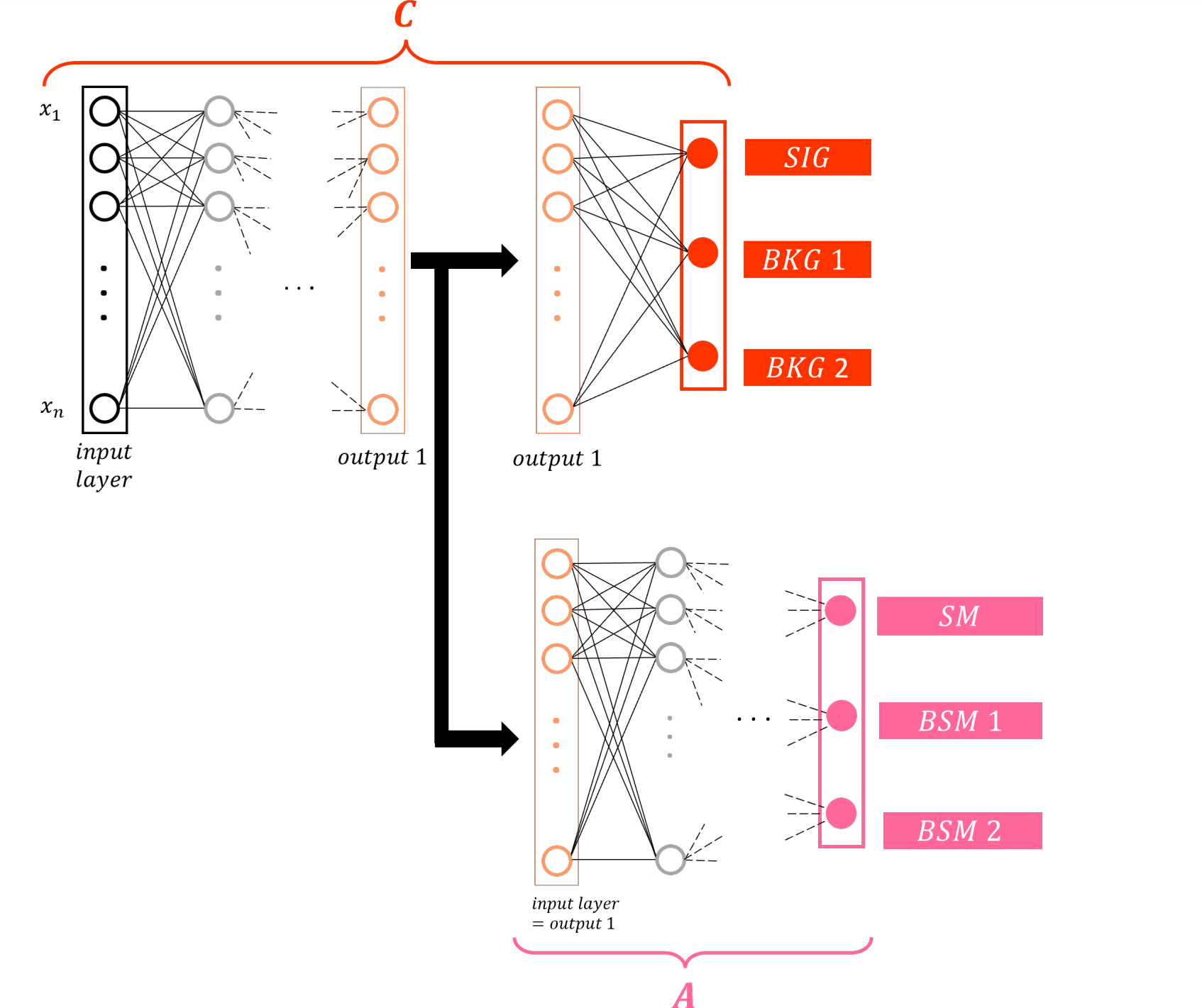

Domain Adaptation to train model-independent classifiers in High Energy Physics

Training Machine Learning classifiers to distinguish signal from background in High Energy Physics usually relies on simulated datasets, at least for the signal component. The simulation of the signal is based on theoretical models that often include hypotheses that have not yet been fully validated. As a consequence, the classifier acquires an implicit bias, and its performance will differ from one theoretical model to another.

Domain adaptation can be used to mitigate the dependence of the trained classifier on the theoretical model adopted for training, forcing the classifier Neural Network to actively ignore the information necessary to distinguish different theoretical models.
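The text does not specify the method, but one common way to realise this idea is domain-adversarial training with a gradient reversal layer (Ganin and Lempitsky). Treat this as an assumption. In this setup a shared feature extractor (parameters θ_f) feeds two heads. The first is the signal/background classifier (θ_y, loss L_y). The second is an adversary (θ_d, loss L_d) that tries to predict which theoretical model generated each simulated signal event. The symbol λ ≥ 0 is a hyperparameter weighting the two objectives, and η is the learning rate.

```latex
\begin{aligned}
E(\theta_f, \theta_y, \theta_d) &= \mathcal{L}_y(\theta_f, \theta_y) - \lambda\, \mathcal{L}_d(\theta_f, \theta_d), \\
(\hat\theta_f, \hat\theta_y) &= \arg\min_{\theta_f, \theta_y} E(\theta_f, \theta_y, \hat\theta_d), \qquad
\hat\theta_d = \arg\max_{\theta_d} E(\hat\theta_f, \hat\theta_y, \theta_d), \\
\theta_f &\leftarrow \theta_f - \eta \left( \frac{\partial \mathcal{L}_y}{\partial \theta_f} - \lambda \frac{\partial \mathcal{L}_d}{\partial \theta_f} \right), \\
\theta_y &\leftarrow \theta_y - \eta \, \frac{\partial \mathcal{L}_y}{\partial \theta_y}, \qquad
\theta_d \leftarrow \theta_d - \eta \, \frac{\partial \mathcal{L}_d}{\partial \theta_d}.
\end{aligned}
```

The minus sign in the feature-extractor update is what the gradient reversal layer implements. The adversary keeps minimizing its own loss, while the shared features are pushed to make it fail. This means the features discard the information that distinguishes the theoretical models, while still separating signal from background.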

In this exercise we will apply a simplified version of the method to simulated LHC datasets, discussing its most interesting features and pitfalls.

(figure from arXiv:2207.09293)

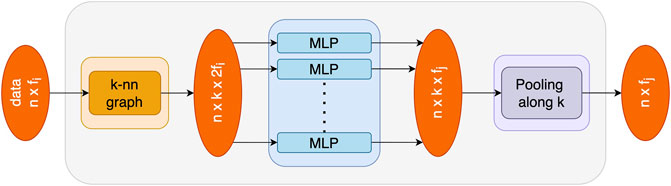

Graph Neural Networks and Transformers

Graph Neural Networks are a class of algorithms designed to apply the principles of Deep Learning to non-tabular data. Graphs, whose nodes represent objects and whose edges represent the relations between them, define the data format of the training and test sets.
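Many Graph Neural Networks are built on message passing: each node updates its feature vector by aggregating the features of its neighbours. The following plain-Python sketch shows a single round of this. The update rule h_i' = ReLU(W_self h_i + W_neigh · mean of neighbour features) is one common choice assumed here for illustration, and the function name `message_passing_step` is hypothetical. In a reconstruction task the nodes might be, for instance, detector hits, and the edges candidate connections between them.

```python
def message_passing_step(features, edges, w_self, w_neigh):
    """One round of mean-aggregation message passing on an undirected graph.

    features: list of node feature vectors (lists of floats)
    edges: list of (i, j) node-index pairs, used in both directions
    w_self, w_neigh: weight matrices as lists of rows (out_dim x in_dim)
    """
    n, dim = len(features), len(features[0])
    neighbours = [[] for _ in range(n)]
    for i, j in edges:
        neighbours[i].append(j)
        neighbours[j].append(i)

    def matvec(m, v):
        return [sum(a * b for a, b in zip(row, v)) for row in m]

    updated = []
    for i in range(n):
        nbrs = neighbours[i]
        # Aggregate: mean of the neighbours' features (zeros for isolated nodes).
        if nbrs:
            agg = [sum(features[j][k] for j in nbrs) / len(nbrs) for k in range(dim)]
        else:
            agg = [0.0] * dim
        # Update: combine the node's own state with the aggregated message, then ReLU.
        pre = [a + b for a, b in zip(matvec(w_self, features[i]), matvec(w_neigh, agg))]
        updated.append([max(0.0, x) for x in pre])
    return updated


if __name__ == "__main__":
    # Path graph 0 - 1 - 2 with one scalar feature per node and 1x1 weights.
    h = [[1.0], [2.0], [4.0]]
    print(message_passing_step(h, [(0, 1), (1, 2)], [[1.0]], [[1.0]]))
    # [[3.0], [4.5], [6.0]]
```

Stacking several such rounds, with weights learned by gradient descent, lets information propagate further across the graph. After k rounds, each node has seen its k-hop neighbourhood.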

Transformers are another advanced architecture, widely used in Natural Language Processing, that introduces the concept of self-attention so that the Neural Network evaluates each element of its input in the context of all the other elements.
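Self-attention is usually written as softmax(Q K^T / sqrt(d_k)) V, where the queries Q, keys K and values V are linear projections of the input tokens. The following single-head sketch in plain Python shows how each output becomes a context-dependent weighted average of the value vectors. Matrices are lists of rows, and the helper names are illustrative rather than taken from any library.

```python
import math


def matmul(a, b):
    """Multiply an (n x m) matrix by an (m x p) matrix, both given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def softmax(row):
    m = max(row)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x: n tokens of dimension d_model; w_q, w_k, w_v: (d_model x d_k) projections.
    Returns (outputs, attention weights); each row of the weights sums to 1.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(w_k[0])
    # How strongly each token's query matches every token's key.
    scores = [[sum(qi * kj for qi, kj in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in k]
              for q_row in q]
    weights = [softmax(row) for row in scores]
    return matmul(weights, v), weights


if __name__ == "__main__":
    identity = [[1.0, 0.0], [0.0, 1.0]]
    tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    outputs, weights = self_attention(tokens, identity, identity, identity)
    for row in weights:
        print([round(w, 3) for w in row])
```

In a real Transformer the projection matrices are learned. Several heads run in parallel and their outputs are concatenated and projected.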

In this exercise we will experiment with these two advanced architectures and suggest example applications to reconstruction and analysis tasks in High Energy Physics.

(figure from Front. Phys., 19 July 2022 Sec. Radiation Detectors and Imaging)

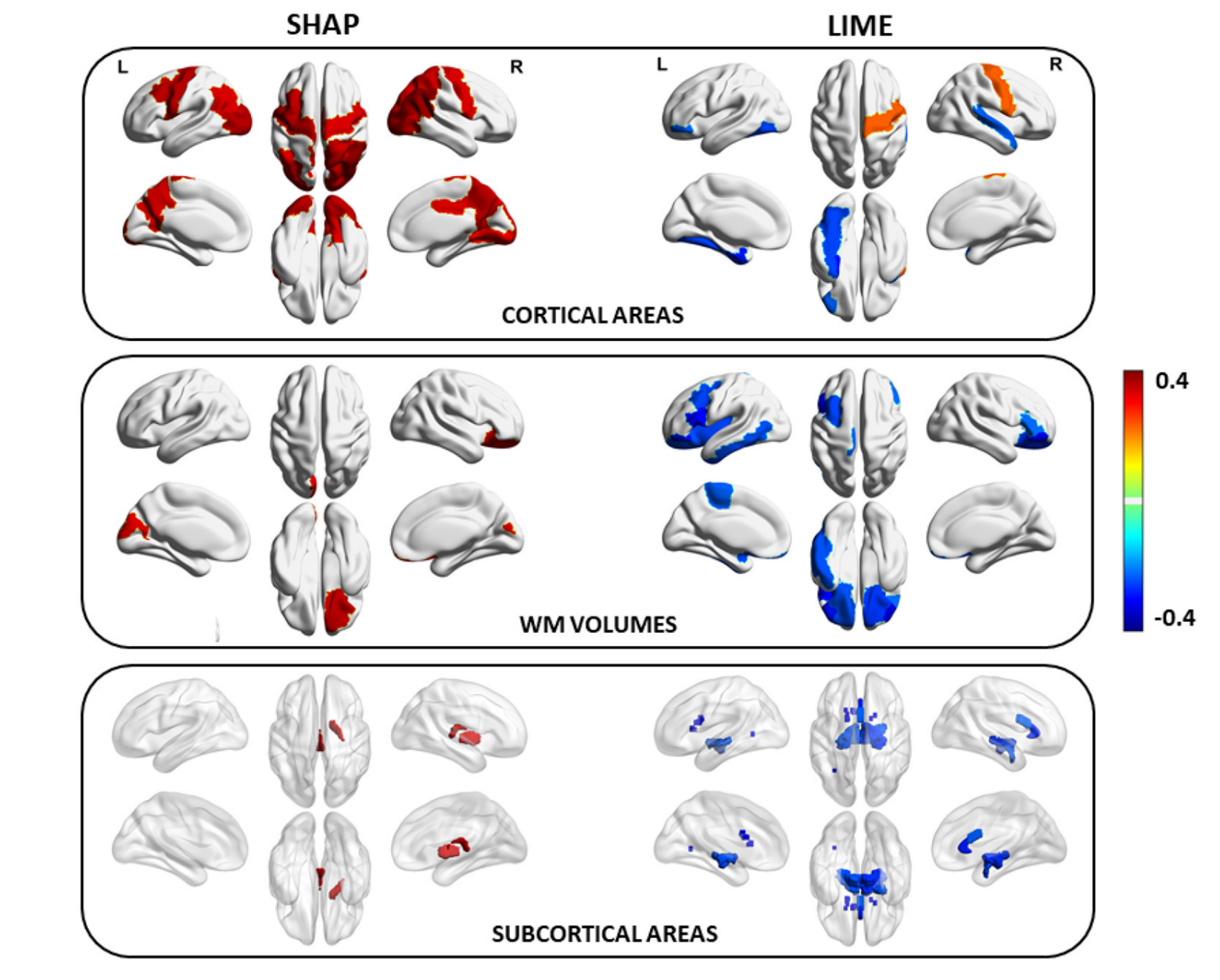

Explainable Artificial Intelligence (XAI)

The terms Explainable or Interpretable Artificial Intelligence refer to a set of tools and techniques that make it possible for humans to understand the decision or prediction of an algorithm. Explainable AI is of great importance in those fields and applications where humans have to build confidence in the predictions of algorithms, notably in Medical Physics.

In this exercise we review the main ideas behind the techniques used in Explainable AI, discussing simple use cases from research on medical applications.
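Occlusion sensitivity is one simple, model-agnostic explanation technique, chosen here only for illustration; the text does not say which methods the exercise covers. It masks one patch of the input at a time and records how much the model's score drops. Regions whose occlusion causes a large drop are the ones the prediction relies on. The sketch treats the model as any Python function that maps a 2D image (a list of rows) to a score. The name `occlusion_map` and the toy model below are hypothetical.

```python
def occlusion_map(image, model, patch=2, baseline=0.0):
    """Occlusion sensitivity for a model that maps a 2D image to a scalar score.

    Each patch x patch block is replaced by `baseline`; every pixel in the block
    gets the resulting drop in score (positive = the model relied on that region).
    """
    h, w = len(image), len(image[0])
    reference = model(image)
    heat = [[0.0] * w for _ in range(h)]
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            rows = range(top, min(top + patch, h))
            cols = range(left, min(left + patch, w))
            occluded = [row[:] for row in image]  # copy so the input stays intact
            for r in rows:
                for c in cols:
                    occluded[r][c] = baseline
            drop = reference - model(occluded)
            for r in rows:
                for c in cols:
                    heat[r][c] = drop
    return heat


if __name__ == "__main__":
    # Hypothetical toy "model": its score depends only on the top-left 2x2 region.
    def toy_model(img):
        return sum(img[r][c] for r in range(2) for c in range(2))

    image = [[1.0] * 4 for _ in range(4)]
    for row in occlusion_map(image, toy_model, patch=2):
        print(row)
```

For a medical image classifier, the same loop would highlight the anatomical regions driving a prediction. A physician can then check whether those regions are clinically plausible.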

(figure from Front. Neurosci., 28 May 2021 Sec. Brain Imaging Methods)